A patent application published Thursday shows Apple developing advanced features for Made-for-iPhone (MFi) hearing devices. The application describes storing and quickly selecting different configuration profiles for MFi hearing aids on the iPhone.

The Case for MFi Hearing Aids

It is no secret that people’s hearing needs can change based on the environment or task at hand. Someone who needs hearing aids might prefer more volume in one ear when driving, for example. In a theater, you may prefer your hearing aids’ microphone volume set completely differently than while walking down the street.

In years past, hearing aids have often had independent volume controls for each ear. However, as these essential devices become smaller and less obtrusive, the available surface for audio controls shrinks. That’s where the MFi program comes into play for hearing devices.

Advanced MFi Hearing Aid Features for Different Environments

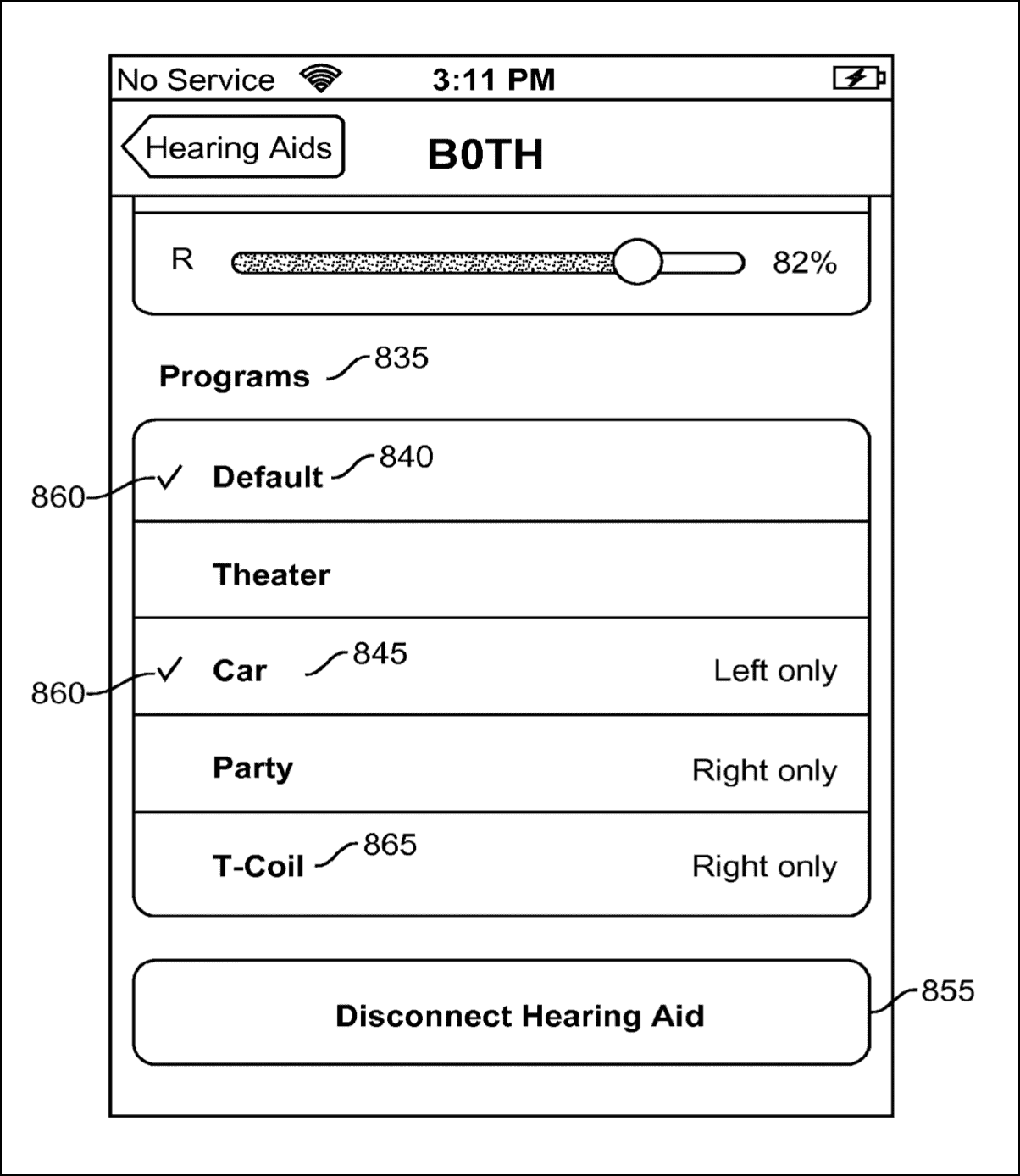

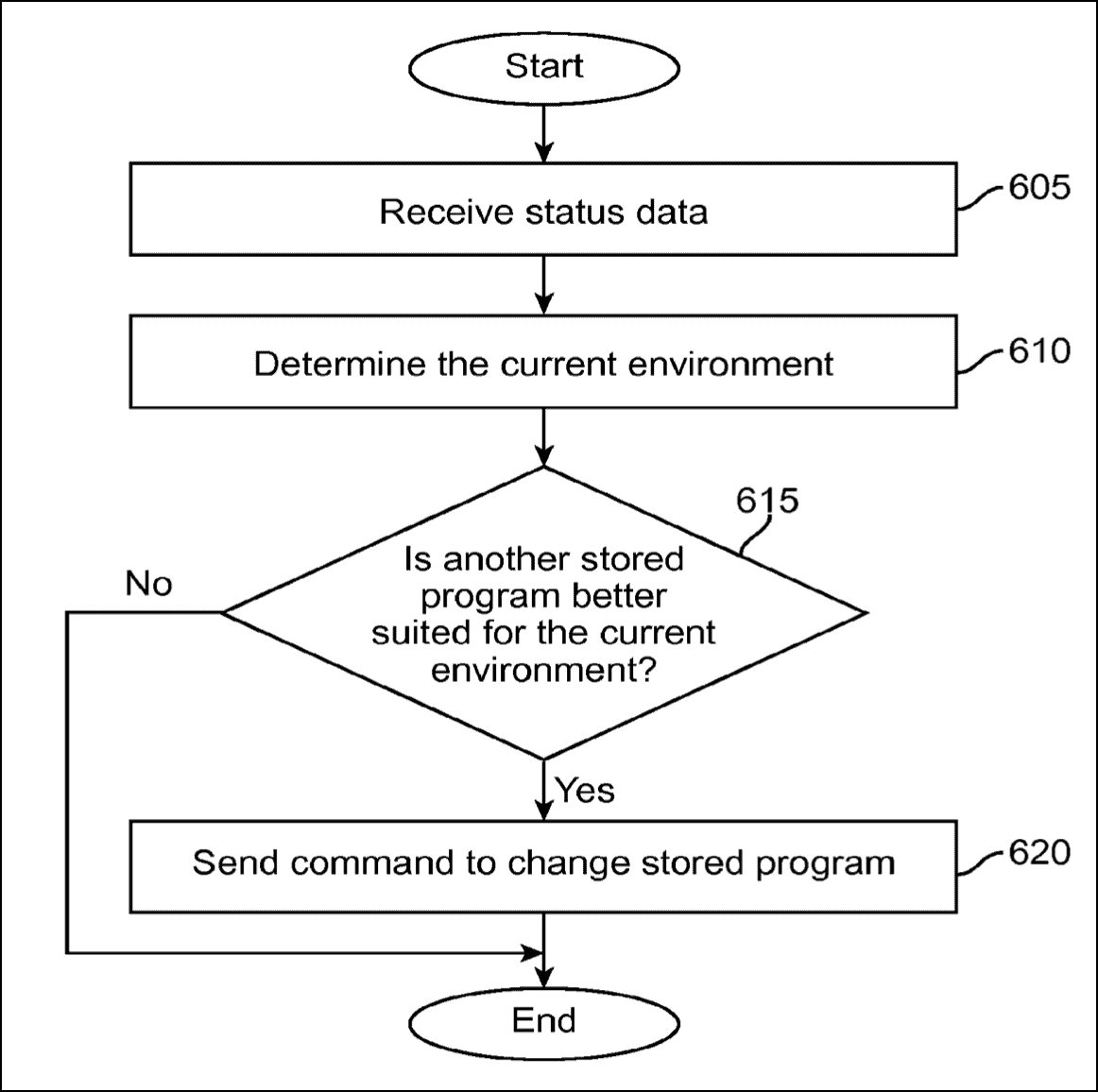

Consumers can configure presets for their MFi hearing aids in iOS, but Apple appears to be looking to take those options up a level (or three). The new patent application describes automatically choosing a preset for hearing devices based on various environments.

Specifically, it describes an iPhone automatically selecting a preset when you connect your smartphone to your car, and other modes for different environments. The iPhone would determine the current environment based on ambient noise as well as Bluetooth and other connections.

This can be determined in numerous ways. For example, the environment of the hearing device can be assumed from the location of the control device connected to the hearing device. For example, the control device can include a GPS module configured to communicate with a satellite to receive GPS location data so as to compute the geographical location of the device.

In this case, the control device would be your iPhone. Apple goes on to describe using the GPS data along with map information to “indicate that the given location is a stadium or theatre.” Similarly, iOS might figure out you’re in a restaurant. The iPhone would then automatically select the most appropriate preset for your hearing devices.
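
To make the idea concrete, here is a minimal sketch of how such a selection might be prioritized. It is not taken from the patent application or from iOS. The `Signals` fields, the preset names, the priority order, and the 70 dB threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical preset names; the patent application does not list specific presets.
VENUE_PRESETS = {
    "stadium": "stadium",
    "theater": "theater",
    "restaurant": "restaurant",
}


@dataclass
class Signals:
    """Environment hints the control device (the iPhone) might have available."""
    connected_to_car: bool = False      # e.g. a Bluetooth link to the car
    venue_type: Optional[str] = None    # assumed result of GPS location plus map data
    ambient_db: Optional[float] = None  # assumed measured ambient noise level


def select_preset(signals: Signals, noisy_threshold_db: float = 70.0) -> str:
    """Pick a preset name, checking the most specific signal first."""
    # A car connection is treated as the strongest hint (an assumption).
    if signals.connected_to_car:
        return "driving"
    # Next, use the venue type inferred from location and map information.
    if signals.venue_type in VENUE_PRESETS:
        return VENUE_PRESETS[signals.venue_type]
    # Fall back to ambient noise when nothing else is known.
    if signals.ambient_db is not None and signals.ambient_db >= noisy_threshold_db:
        return "noisy"
    return "default"
```

In this sketch, `select_preset(Signals(venue_type="theater"))` picks the theater preset, while a phone connected to a car gets the driving preset regardless of the other signals. A real implementation would likely weigh these hints rather than rank them strictly.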

Improving Live Listen Mode to Transcribe Heard Speech

Another improvement noted in the patent application seems to apply to Apple’s Live Listen mode. This feature currently allows a user to use an iPhone, iPad, or iPod touch as a remote microphone. With recent versions of iOS and AirPods, Live Listen can even use your AirPods or AirPods Pro as the microphone. The remote microphone sends sound to your MFi hearing aid, helping you better hear conversation in a noisy room or someone speaking from a distance.

Adding to that functionality, Apple is exploring the idea of translating the received speech from Live Listen mode and transcribing it onto a display. This way, a student could get a transcription of the lecture on a MacBook while taking notes.

Predicting Future Technology from Patent Applications

Naturally, this is only a patent application. Apple sometimes files dozens of these each week, and many of them never make it into any final product. Quite often, we see patent applications for products that never even leave Apple’s innovation labs.

That said, Apple’s push to improve accessibility options within its products means this could very well be something the iPhone maker wants to bring to market. It would certainly be another selling point for the iPhone within the Deaf community.