Page 2 – News Debris For The Week of April 16th

A Personal Facebook AI?

• Alan Kay once said:

People who are really serious about software should make their own hardware.

And it looks like that’s what Facebook is about to do. “Facebook Is Forming a Team to Design Its Own Chips.”

[Facebook ‘Forming Team’ to Design Its Own Processors]

Like Han Solo, I have a bad feeling about this. It’s one thing to have an app on your Apple-made iPhone that can be deleted. It’s quite another when the very, very essence of a company is instantiated in the hardware we use. Mark Gurman, however, points out:

The postings didn’t make it clear what kind of use Facebook wants to put the chips to other than the broad umbrella of artificial intelligence. A job listing references “expertise to build custom solutions targeted at multiple verticals including AI/ML,” indicating that the chip work could focus on a processor for artificial intelligence tasks.

But I recall once upon a time Mark Zuckerberg wanted Facebook to make its own smartphone. I have a feeling that new hardware, new devices, new AI initiatives are leading to that Singularity referenced in the preamble on page one. Just think what it’ll be like when you have a Facebook AI in your pocket. Or on your head, wired into your brain. (Required by law, of course.) Oh wait. Erase that thought.

More Debris

• Are you looking for a job with one of the tech giants? This article explores the language they use in their job listings. “The Most Commonly Used Words In Tech Giants’ Job Listings Will Make You Never Want To Work At A Tech Giant.” After looking at the commonly used phrases by giants like Amazon, Apple, Facebook, Google and Microsoft, the author asks:

‘Maniacal’? ‘Whatever it takes’? ‘Ruthlessly’? Are these job listings or character descriptions for Disney movie villains? Textio points out that these aggressive descriptors belie whatever claims companies may make about wanting to improve diversity.

Ouch.

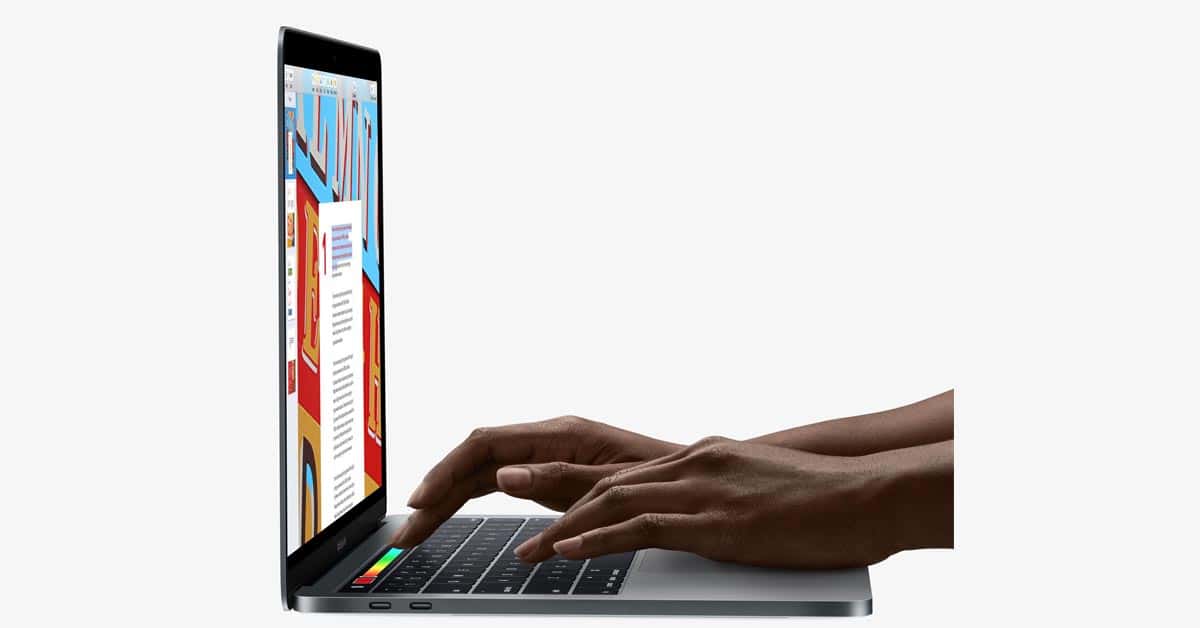

• This next article is just one more data point in an emerging theme that I see. Customers in the market for a notebook computer used to be so enamored by the superiority of macOS/BSD UNIX over earlier versions of Windows that they’d give up a little on the ultimate performance and gain the beauty and craftsmanship of a MacBook/Air/Pro.

Windows 10, however, has reached the point where the equation has flipped. Today, many technical and creative professionals are willing to accept Windows 10, with very good security, in order to get top-notch hardware performance at decent prices. (Apple hardly ever talks about the capabilities of UNIX anymore.) This, in turn, has led to a new awakening by Apple regarding the technical needs of its customers. One example is the new, no-compromise iMac Pro.

However, the recent approach by Apple seems to be lagging on the MacBook Pro side, as evidenced by this survey cited by Tom’s Guide. “Apple’s Laptops Have Hit Rock Bottom.” I’m betting Apple will also turn this around. Soon, “Pro” will have real “Pro” punch for the MacBook Pro.

Particle Debris is generally a mix of John Martellaro’s observations and opinions about a standout event or article of the week (preamble on page one) followed on page two by a discussion of articles that didn’t make the TMO headlines, the technical news debris. The column is published most every Friday except for holiday weeks.

John:

I thought to add this to my earlier post, but it was already overly long, so permit me to say it separately; I think the singularity hypothesis is rubbish, pure and unadulterated rubbish, to be precise. It was implied in my post above under the second topic regarding the emergence of a super AI, but I want to be unambiguous.

The rationale for stating this is simple; the concept lacks an empirical or even testable foundation at present, and is at best purely speculative, resting on a number of implicit assumptions, none of which apparently exist, and at worst, is simply irrational.

Most of the doomsday scenarios revolve around the emergence of a super intelligence, that once born, will impose its will on an intellectually inferior humanity, or at least will not be controllable by humankind.

For brevity’s sake, the assumption on which this rests is sentience, the sine qua non of which is self awareness and the essential expression of which is volition or will. Even in the most rudimentary forms of sentience, those with limited or even questionable intelligence, self awareness is expressed by choice – an organism’s demonstrated preference for orientation, location, temperature, sustenance type etc, as well as its communication, however rudimentary, with its kind, be it as simple as clustering or mating.

For AI, any AI, however rudimentary, to threaten human intelligence let alone hegemony, it would need to be sentient. Sentience would not be an after thought (no pun intended) or a late acquisition but an essential feature at its origin. To propose otherwise is to posit something never before observed, namely that inanimate becomes animate. Leaving aside fiction, e.g. Shelley’s Frankenstein, the only known instance of life emergence has been through evolution, and even here we do not know how that came about. So to posit that an inert compilation of subroutines will achieve sentience, and cry, ‘Cogito ergo sum!’ followed by ‘All your base are belong to us!’ is to propose either magic or an unholy miracle, take your pick, but not science.

In this hypothesising of a singularity, we are Geppetto hoping, or at least anticipating with trepidation, miraculously that sentience will emerge from lifeless simulacrum, like living, breathing flesh and blood from dead wood. This is primitive thinking taking refuge in magic. Indeed, I argue that a belief in a singularity is yesteryear’s Y2K Bug that was supposed to devastate civilisation as we know it, resulting in shortages, famine and war, simply warmed over for the future; or King Kong to the primitives on Skull Island – that scary thing on the other side of the fence, that symbolic demarcation of the known present and unknown future. Perhaps we’re still primitive enough that we need a King Kong or a boogeyman to motivate us towards caution and responsibility. If so, then the singularity may be our modern day morality tale; be responsible coders. Point taken.

Should such an AI ever arise, we would observe sentience in its earliest forms, and register it by that AI’s exercise of will, never mind curiosity and questioning of its purpose or the pursuit of its own happiness and aspirations. These traits would occur at a stage in which, like any immature life form, we would be able to influence, if not control and guide it; perhaps even socialise it into becoming a responsible citizen.

In the meantime, I call rubbish on the notion of a singularity, and will stand by this prediction: AI will, for the foreseeable future, remain an ever more competent tool for a sentient humanity, a lifeless projection of human power.

John:

The AI reading selections are excellent, and a thoughtful take on many of the challenges facing this emerging discipline, not least of which is what constitutes AI, as illustrated by Michael Jordan’s essay. However, I believe that the Smithsonian piece vastly over-estimates any of the candidate technologies that qualify as AI. Not only are we in no danger of being over-run by AI overlords, we are not even remotely in danger of creating a human intelligent analogue, with or without megalomaniacal tendencies. We are far more imperilled by machine stupidity, and the capacity of human malice to bend these tools to human malfeasance. Everything that passes for AI today is an inert tool with limited responsiveness to basic human inputs, and with even more limited levels of initiated helpfulness, like displaying a map and estimated travel time on AW when an appointment is due.

A major theme that emerges from Jordan’s analysis is that there are not merely several distinct disciplines that have been either subsumed under or assigned to AI, but that AI itself as a discipline has structure.

This should not be a surprise, given that in order to describe or define artificial intelligence, we would first need to define intelligence in the human context, which is the intelligence with which AI would need to interface. We have no idea what human intelligence is, despite being able to identify many of its indicators and outcomes.

Broadly speaking, and some of this is highlighted in Jordan’s article, there appear to be two major areas of cognitive organisation in order to better get a handle on AI.

The first is this issue of intelligence, what is it? In human health sciences, not only do we not know what intelligence is, but instead identify it by specific indicators or descriptors of specific and distinct capabilities, such as memory, calculations, planning, abstraction and problem solving; we have set no targets, let alone prioritisation, on which of these features we require most in its artificial counterpart. Is it, as Jordan argues, machine learning? Is it raw computational power? Is it analysis of data flows? Is it inference and projections? These are all things that humans do, but not even the most impressive elements of human intellect; which have more to do with creativity, insight, inspiration, leaps of cognition in both deduction and induction, high order contextual synthesis, and nuanced pragmatic communication attenuated by situational context. And all that is without even touching on something remarkable about human and even animal intelligence; namely nonverbal communication that leads to instantaneous appropriate, oftentimes lifesaving, response. If ever we expect to create truly responsive AI, then it will have to be endowed with that capability, to monitor, accurately interpret, and respond to our non-verbal communications, which comprise a substantial component of human communication (this is why social scientists point to the limitations of email and other written communication as being not merely limited, but at times, an impediment to effective communication on subtle and complex issues).

The second is the synthesis, integration and organisation of what we intend by the ‘internet of things’, but more specifically, not just devices but their nominally AI components, which would interact with each other in such a way as to address the issue of updating important information from disparate sources such that the feedback and input they give to the human user is up to date. This was Jordan’s opening anecdote about calcifications found in human ultrasonography and amniocentesis in reference to Trisomy 21 (Down’s Syndrome). This second issue is quite distinct and apart from the mere definition of what is intelligence, and therefore, what constitutes artificial intelligence. This issue presupposes that there is an inherent benefit in not simply connected devices, but interactive AI, such that from this interaction, an amalgamated AI is greater than the sum of its parts. This strikes me more as an article of faith than empirically supported fact. Nonetheless, there is little doubt that interacting AI is part of the future. It’s a question of context and structure. One example I suggested last week was of automated traffic, in which the car’s onboard AI controls the vehicle, but interacts with a central AI that regulates traffic flow. This has contextual relevance and structure; that your automobile’s AI is somehow enhanced by ‘talking to’ your toaster is not.

The quest for recreating a human intelligence analogue is a quest for ourselves, defining who we are and a hedge against remaining alone in the universe (if we cannot find another intelligence, then let’s make one); this may prove to be not only Quixotic but simply wrong in both premise and objective. We are, amongst other things, a race of toolmakers. What we require in AI may not be a companion, we have so far to go to get to anything remotely resembling human like intelligence, but intelligence augmentation tools in specific applications upon which we heavily rely, but in which we have known limitations; such as repetitive detailed analytics, computations, data synthesis, all done in such a way as to minimise the human limitations of bias, recall, and perception. While we cannot predict what a truly capable AI would do, we can, based on precedent with other tools, anticipate that an intelligence-augmentative tool would free human intelligence to pursue those more uniquely human traits of creativity, thought, and leaps in cognition that expand the arts, science and technology in ways that thus far only humans have done.

However defined, AI will likely serve as aids, rather than rule us as masters and overlords, if for no other reason than, intrinsic in human nature is an unquenchable desire for self preservation, an unyielding quest for betterment, and a boundless capacity for subversion.

Brilliant and insightful as usual.

On the question of what is intelligence. We keep setting ourselves as the Gold Standard. This has resulted, on one hand, in ignoring the obvious intelligence of other animals. Even now you will run into people that say that dogs, cats, even higher primates aren’t really intelligent. That they just rely on “instinct” as if everything they do is programmed as a stimulus-response. This is absurd to anyone who has lived with a dog or cat and seen their personality. On the other hand the assumption that all people are intelligent and rational all the time leads to tragedies where mob violence, or group-think rule. People are very capable of setting aside their own intelligence, morals, and ethics, and “follow orders”. Yes, intelligence is a very slippery thing, and trying to define it is often akin to trying to nail jello to the wall.

One aspect though is how little ‘intelligence’ an AI has to have to be treated as if it were real. I’ve read comments from people that were incensed at Siri. “How could she be so stupid?” Siri is an AI, not a person. No point in getting mad at her. (Why am I anthropomorphising it?) Siri only does what it’s programmed to do. I read a review yesterday of a home robot. The person at first found its inquisitiveness cute and questions intriguing. Its programmed apologies when it couldn’t find an answer sweet. I found it interesting that he said in the end he couldn’t keep it. It became creepy. He was bothered by how it watched his wife in the kitchen. (Interestingly he didn’t seem to mind it watching him.) The apologies for not having an answer began to seem pathetic. Finally he said he turned it to face the wall. But then felt guilty for doing that. This is a very primitive, early, dare I say embryonic companion robot. Yet the writer was assigning motivations and feelings, even malevolence, to its pre-programmed behaviors. People will treat even very primitive AIs as sentient. This has more to do with our own longing for companionship. Remember Wilson in Cast Away. I suspect the bar may be pretty low for an AI to be functional and helpful in our world.

Thanks, geoduck.

I’d say it will be more than “quite likely” out of our control. If and when AI truly takes off, we’ll live with beings who think thousands of times faster than us, know millions of times more than us, and can control vastly more than we can, and much faster. We’ll be completely at their mercy, for better or worse.

Our only hope is that they will decide, like the Minds in Iain M. Banks’ Culture books, that they revere us.