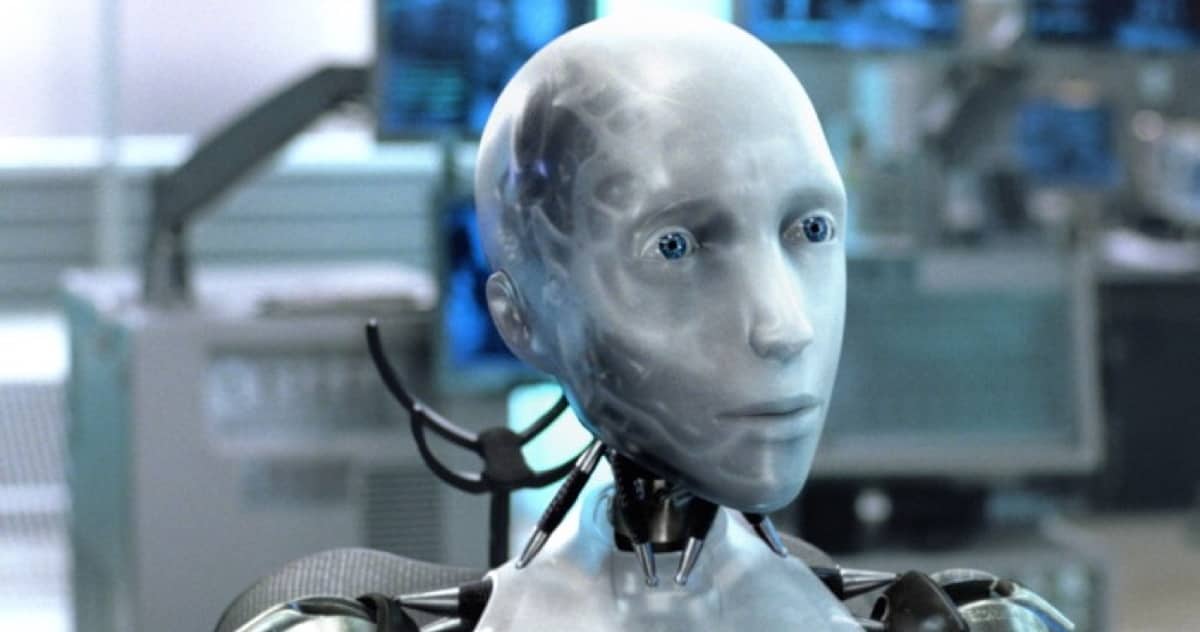

From boingboing: “‘Under what circumstances and to what extent would adults be willing to sacrifice robots to save human lives?’ That was the question posed by researchers at Radboud University in Nijmegen in the Netherlands and Ludwig-Maximilians-Universitaet (LMU) in Munich.” The results have implications for how we’ll design robots with apparent human feelings.

Check It Out: How Far Would You Go to Protect a Robot?

The question is wrong. It should be: how far should a robot go to protect a human?

Robots are machines. They might be able to "think" for themselves using AI and have "feelings" based on machine learning, but that doesn't make them anything more than disposable machines. It also means the body of their knowledge can be captured and dumped to external storage, in whatever form that might take.

Have YOU tried programming one? It's hard.

Nevertheless, an interesting one for the Ethics Committee, HAL.