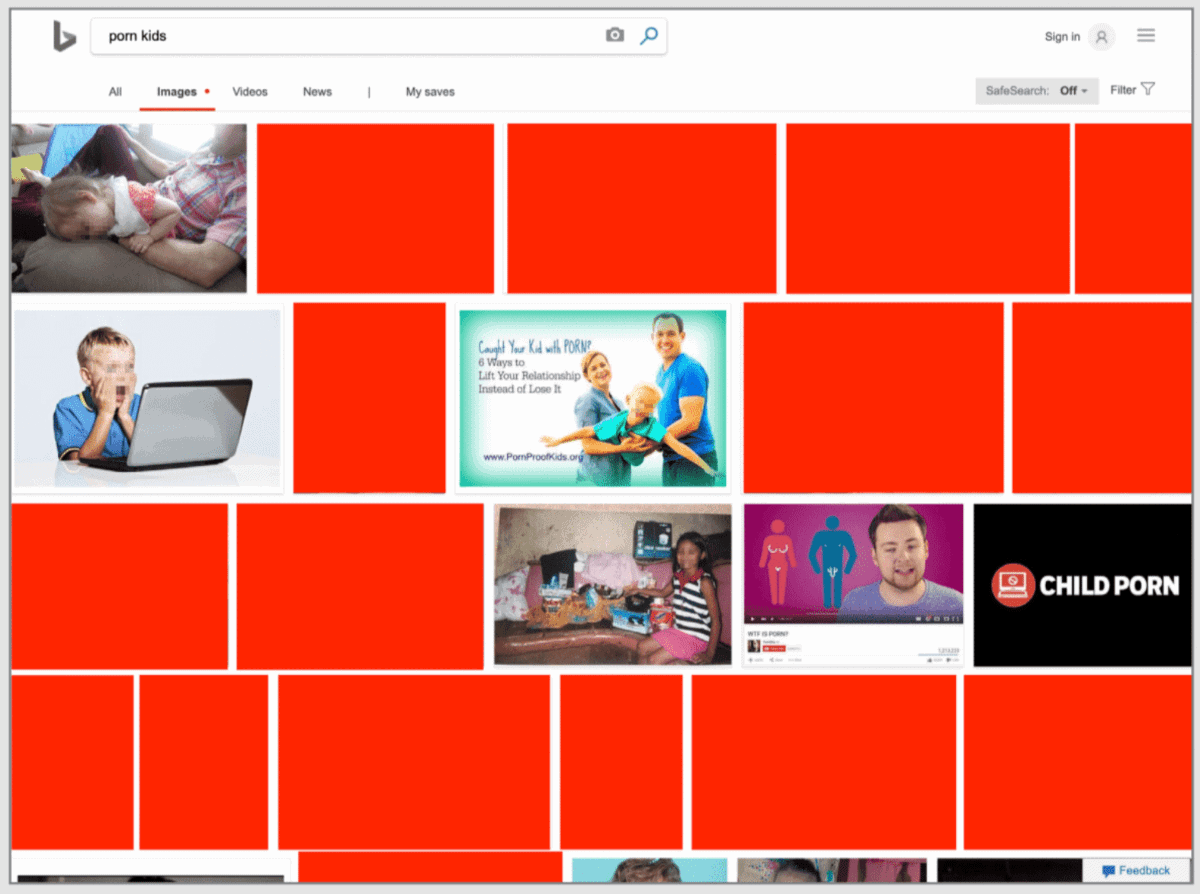

A disturbing report from TechCrunch finds that not only is it possible to find child porn via Microsoft Bing, but the search engine can also suggest it.


NSFW Bing

According to popular internet culture, Bing is a great search engine for porn. But it seems that "porn" isn't limited to adults. Researchers found that certain search terms surface illegal images of children, and that Bing could end up suggesting such images even to users who never explicitly searched for them.

Bing's machine learning algorithms suggest what to search for next based on the current query, including auto-completed search terms. During an image search, a user could click an auto-completed child porn term, and the Similar Images section would then surface further related searches.

The researchers also found that the same searches on Google surfaced far fewer illegal images than Bing did. AntiToxin CEO Zohar Levkovitz said:

Speaking as a parent, we should expect responsible technology companies to double, and even triple-down to ensure they are not adding toxicity to an already perilous online environment for children. And as the CEO of AntiToxin Technologies, I want to make it clear that we will be on the beck and call to help any company that makes this its priority.


Andrew:

As one of your previously linked articles pointed out, algorithms (machines and machine learning) have no values and, unless such parameters are built in, will not mimic or respect human cultural sensitivities. This is a case in point, and it underscores the continued need for some degree of human oversight, even if it is simply a matter of random spot checks, as we do in other disciplines of data collection and analysis.

Better still, a professional-grade monitoring and evaluation protocol, even one run by machine, can feed outputs (performance indicators) to a human monitor and thereby proactively flag unwanted outcomes of algorithmic performance.

I cannot understand why this is not being done by a company with the enterprise focus and resources of Microsoft.

I agree about algorithmic oversight. Part of the irony in this case is that Microsoft built PhotoDNA, a tool used to help identify child abuse images online. It is odd that they didn't turn it inward.