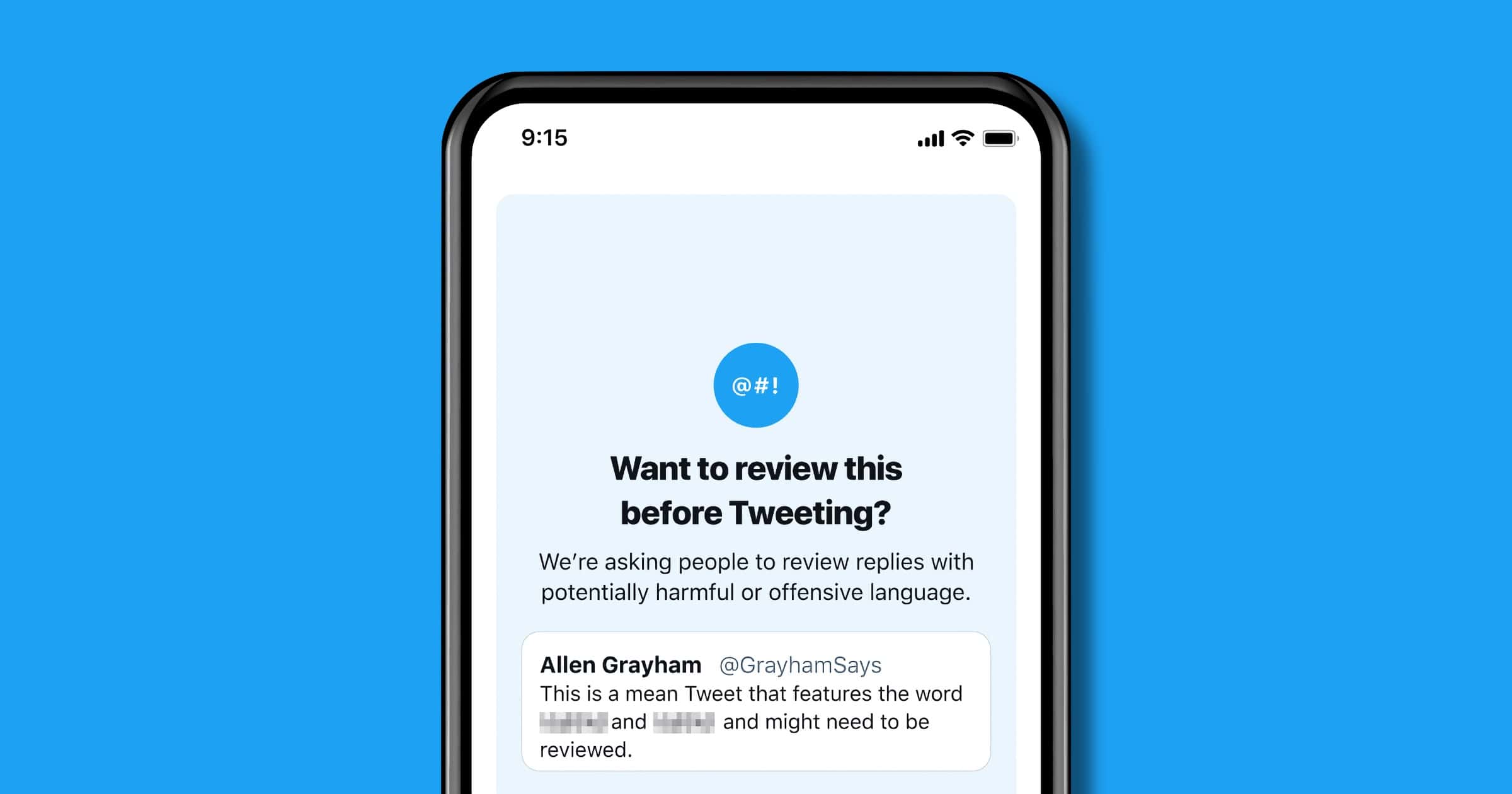

On Wednesday, Twitter announced that it will roll out a feature for English-language users on iOS and Android that prompts them to reconsider their replies. If the app detects a reply containing "potentially harmful or abusive language," the user will be asked to review it before it is sent.

Reviewing Tweets

A screen will appear showing the content of the reply, and users will have the option to edit it, delete it, or send it as is.

In 2020, the company first tested prompts that encouraged people to pause and reconsider a potentially harmful or offensive reply before they hit send. Based on that testing and on user feedback, Twitter is now officially releasing the feature. Here is what the company learned:

- If prompted, 34% of people revised their initial reply or decided not to send it at all.

- After being prompted once, people composed, on average, 11% fewer offensive replies in the future.

- If prompted, people were less likely to receive offensive and harmful replies back.

The company says it will continue to explore and encourage “healthier conversations” on its platform.