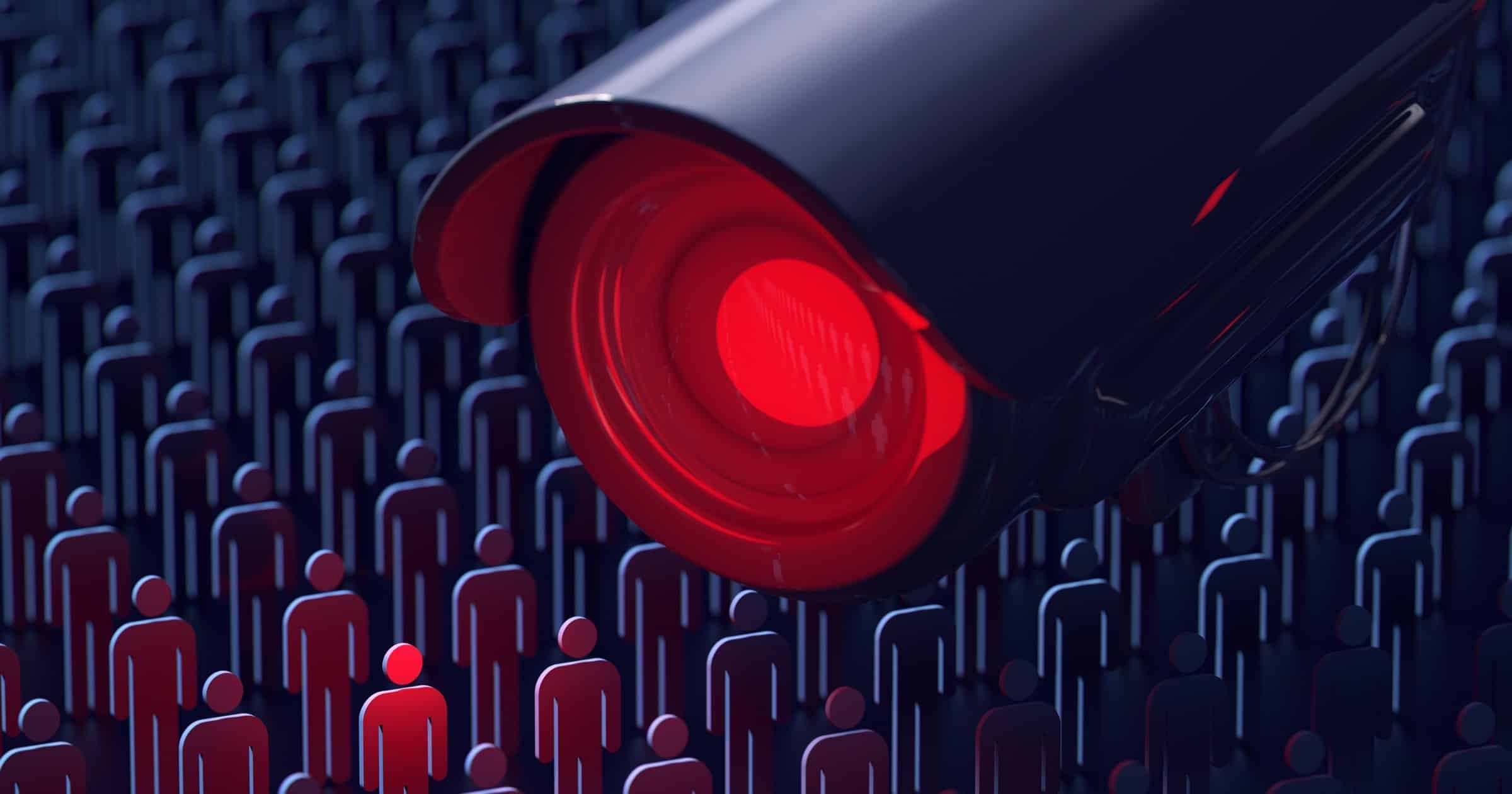

This week we learned that Apple plans to run its scanning for child sexual abuse material (CSAM) locally, on users' devices. The move has been widely criticized, and the Electronic Frontier Foundation has published a statement on the matter.

All it would take to widen the narrow backdoor that Apple is building is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan, not just children’s, but anyone’s accounts. That’s not a slippery slope; that’s a fully built system just waiting for external pressure to make the slightest change.