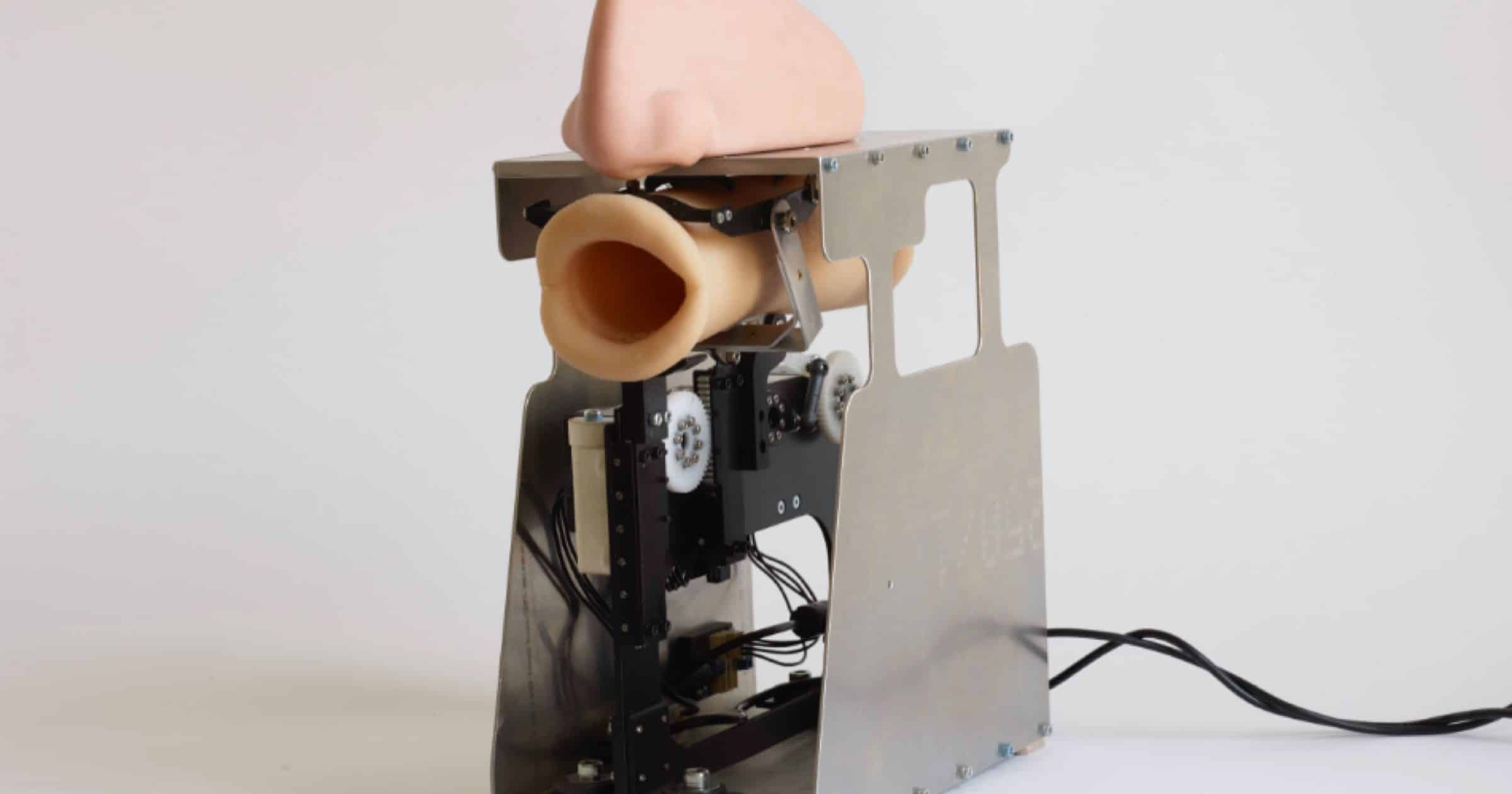

Artist Diemut Strebe built a praying robot “to explore the possibilities of an approximation to celestial and numinous entities by performing a potentially never-ending chain of religious routines and devotional attempts for communication through a self-learning software.” The production is a collaboration with Regina Barzilay, Tianxiao Shen, and Enrico Santus (all MIT CSAIL), Amazon Polly, Bill and Will Sturgeon, Elchanan Mossel (MIT), Stefan Strauss, Chris Fitch, Brian Kane, Keith Welsh, Webster University, and Matthew Azevedo. “Wretched sinner unit! The path to robot heaven lies here… in the Good Book 3.0.” ―Lionel Preacherbot

artificial intelligence

The Singularity: Can Computers Make Themselves Smarter?

Writing for The New Yorker, Ted Chiang argues that the concept of a technological singularity, in which computers or AI would be able to make themselves ever smarter, is similar to an ontological argument. In other words, it probably won’t happen.

How much can you optimize for generality? To what extent can you simultaneously optimize a system for every possible situation, including situations never encountered before? Presumably, some improvement is possible, but the idea of an intelligence explosion implies that there is essentially no limit to the extent of optimization that can be achieved.

Skylum Releases ‘Luminar AI’ Photo Editor for $79 on Mac

Known for products like Aurora HDR and Luminar, Skylum has launched its latest photo editor, Luminar AI.

Robot Lawyer ‘DoNotPay’ Adds Tax Fraud to its Repertoire

DoNotPay is a machine learning service that handles a variety of tasks, like canceling free trials, appealing parking tickets, and more.

Apple Buys AI Video Company ‘Vilynx’

Apple has acquired Vilynx Inc., a company based in Barcelona that specialized in AI and computer vision technology for videos.

Delve Into the ‘AI Dungeon’ for Text-Based Adventures

AI Dungeon is a text-based adventure game where the adventures are generated on the fly by machine learning. This means there is a near-infinite number of adventures you can play. It’s available on the web and as an app for Android and iOS. There are two worlds you can play in: Xaxas (shown above) and Kedar (shown below). Xaxas is a world of peace and prosperity, a land in which all races live together in harmony. Kedar is a world of dragons, demons, and monsters. AI Dungeon also offers other ways to play, like choosing a theme, playing multiplayer, or not choosing a world at all.

China Would Rather TikTok Be Shut Down Than Sold

A report on Friday says that China would rather TikTok be shut down than sold to a U.S. company.

However, Chinese officials believe a forced sale would make both ByteDance and China appear weak in the face of pressure from Washington, the sources said, speaking on condition of anonymity given the sensitivity of the situation.

ByteDance said in a statement to Reuters that the Chinese government had never suggested to it that it should shut down TikTok in the United States or in any other markets.

Here’s what I think this means. China is all about AI, and based on reports, its algorithms seem to be more advanced than even those of the invasive Facebook. China doesn’t want the U.S. to know just how much more advanced its algorithms are. Read: China’s export ban on such technology.

Apple AI/ML Residency Program Invites Experts to Collaborate

Apple has launched an AI/ML residency program that invites experts to build machine learning and AI-powered products and experiences.

AI Company ‘Cense AI’ Leaks 2.5 Million Medical Records

Secure Thoughts worked with security researcher Jeremiah Fowler to uncover how Cense AI leaked 2.5 million medical records, which included names, insurance records, medical diagnosis notes, and a lot more.

The records were labeled as staging data and we can only speculate that this was a storage repository intended to hold the data temporarily while it is loaded into the AI Bot or Cense’s management system. As soon as I could validate the data, I sent a responsible disclosure notice. Shortly after my notification was sent to Cense I saw that public access to the database was restricted.

1: Burn this company down. 2: Sounds like most of the data are from patients in New York.

‘Hybri’ Can Create a Virtual Companion Based on Real People

A company called Hybri is creating virtual AI companions that live in augmented and virtual reality. But a feature that may prove to be controversial is letting users scan a photo of a real person to superimpose on the avatars.

But the creepiest feature of Hybri is its Photoscan, which allows you to add a real person’s face to the avatar. That means your unrequited love or celebrity crush could soon become your virtual partner — whether they want to or not.

It sounds like a cool idea to me, but it probably won’t pass the App Store review team.

Apple Acquires Machine Learning Startup Inductiv to Improve Data Siri Uses

Apple has acquired machine learning startup Inductiv, Inc. to improve Siri, machine learning, and Apple’s data science endeavors.

Apple Acquires Irish AI Startup ‘Voysis’

Apple has acquired an AI startup called Voysis, which could be used to enhance Siri’s commerce capabilities.

AI Could Build the Next JPEG Image Codec

The Joint Photographic Experts Group (JPEG) is exploring methods to use machine learning to create the next JPEG image codec.

In a recent meeting held in Sydney, the group released a call for evidence to explore AI-based methods to find a new image compression codec. The program, aptly named JPEG AI, was launched last year, with a special group to study neural-network-based image codecs.

AI vs. Machine Learning, Thoughts on Our New Macs, Oak Island - ACM 525

Bryan Chaffin and John Kheit discuss the difference between artificial intelligence (AI) and machine learning, including the state of both today. They also talk about their new Macs (John got a new 28-core Mac Pro, while Bryan has a new iMac) and whether or not they like their new purchases. They cap the show by catching up on The Curse of Oak Island TV show on History.

PETA Wants to Replace Punxsutawney Phil With AI

Animal rights group PETA wants to replace famous groundhog Punxsutawney Phil with an animatronic AI.

The way the group sees it, not only would an AI be better at estimating when the winter will end, but it would also attract an entirely new generation of visitors to the western Pennsylvanian town. “Today’s young people are born into a world of terabytes, and to them, watching a nocturnal rodent being pulled from a fake hole isn’t even worthy of a text message,” Newkirk said. “Ignoring the nation’s fast-changing demographics might well prove the end of Groundhog Day.”

Apple Cancels Xnor.ai Pentagon Contract With Project Maven

Shortly after acquiring AI company Xnor.ai, Apple canceled Xnor.ai’s contract with Project Maven, a Pentagon program that uses algorithms to analyze military drone imagery.

Clearview AI Helps Law Enforcement With Facial Recognition

In a long read from NYT, Kashmir Hill writes about a startup called Clearview AI that works with law enforcement on facial recognition.

You take a picture of a person, upload it and get to see public photos of that person, along with links to where those photos appeared. The system — whose backbone is a database of more than three billion images that Clearview claims to have scraped from Facebook, YouTube, Venmo and millions of other websites — goes far beyond anything ever constructed by the United States government or Silicon Valley giants.

Apple Acquires AI Company Xnor.ai for Image Recognition Tools

Apple acquired artificial intelligence company Xnor.ai, which specializes in “low-power, edge-based tools” like image recognition.

Inside Big Tech’s Manipulation of AI Ethics Research

It’s a long read, but Rodrigo Ochigame, a former AI researcher at MIT’s Media Lab, examines Big Tech’s negative role in AI ethics research.

MIT lent credibility to the idea that big tech could police its own use of artificial intelligence at a time when the industry faced increasing criticism and calls for legal regulation…Meanwhile, corporations have tried to shift the discussion to focus on voluntary “ethical principles,” “responsible practices,” and technical adjustments or “safeguards” framed in terms of “bias” and “fairness” (e.g., requiring or encouraging police to adopt “unbiased” or “fair” facial recognition).

The AI 'AlphaStar' Becomes a Grandmaster in StarCraft II

DeepMind’s AlphaStar AI has recently become a Grandmaster in the game StarCraft II.

StarCraft requires players to gather resources, build dozens of military units, and use them to try to destroy their opponents. StarCraft is particularly challenging for an AI because players must carry out long-term plans over several minutes of gameplay, tweaking them on the fly in the face of enemy counterattacks. DeepMind says that prior to its own effort, no one had come close to designing a StarCraft AI as good as the best human players.

Want to Help Train AI? Send This Company Pictures of Your Poop

A company called Seed wants to build a database of 100,000 poop photos so an AI can learn to tell the difference between healthy and unhealthy poop.

Ara Katz, co-founder and co-CEO of Seed, hopes that the poop project is just one of the company’s many future contributions to our understanding of health. “It’s projects like this [that] allow people who are not scientists to participate in citizen science. By crowdsourcing data, we can help researchers and technologies like auggi in order to help people identify different conditions.”

Take a poop pic and submit it at seed.com/poop.

TV+ Pricing, Microsoft's AI Hire – TMO Daily Observations 2019-08-20

John Martellaro and Charlotte Henry join host Kelly Guimont to talk about the leaked TV+ pricing and the latest AI hire at Microsoft.

AI Tech Like Neuralink Could be 'Suicide For the Human Mind'

Some scientists are worried about technology like Elon Musk’s Neuralink. Cognitive psychologist Susan Schneider wrote an op-ed (paywall) arguing that it could be “suicide for the human mind.”

The worry with a general merger with AI, in the more radical sense that Musk envisions, is the human brain is diminished or destroyed. Furthermore, the self may depend on the brain and if the self’s survival over time requires that there be some sort of continuity in our lives — a continuity of memory and personality traits — radical changes may break the needed continuity.

I’m no neuroscientist, but I subscribe to emergentism, which is the idea that consciousness is an emergent property of the brain. An easy explanation is here, but basically it means that consciousness isn’t a property of any individual neuron, but rather something that arises when you get enough neurons interconnected. This isn’t something that could be replicated with code.

Apple Glasses Could be the Company’s Next Health Device

Apple Glasses that use augmented reality have a lot of potential uses, like gaming and Apple Maps directions. What if health could be another feature?