Learn why iCloud Shared Album issues occur, and find easy fixes for a Shared Album that is not appearing or an invite link that is not working.

iCloud Photos

How to Transfer Photos from iCloud to Google Photos

Moving photos from one service to another can be a tedious task. But Apple made it easier to transfer photos from iCloud to Google Photos.

How to Fix 'Cannot Download Photo from iCloud' iPhone Error

Here are some troubleshooting tips to fix the “cannot download photo from iCloud” error if it appears on your iPhone.

How to Look at Photos on iCloud and More

Find out how to look at photos in iCloud, navigate the iCloud website and other important things you need to know about this service.

How to Share iCloud Storage Using iPhone, iPad or Mac

Subscribing to a premium iCloud storage plan is worth it if you know how to share iCloud storage with your family and even friends.

How to Delete Photos from iPhone But Not iCloud

Take lots of photos and videos but want them off your iPhone? There are a few ways to delete photos from your iPhone but not iCloud.

Apple Cancels Plans for CSAM Detection Tool for iCloud Photos

Apple is abandoning its planned CSAM detection tool for iCloud Photos and will refocus on its Communication Safety feature instead.

Microsoft Adds iCloud Photos Integration to Windows 11

Microsoft’s promised integration of iCloud Photos into Windows 11’s Photos app is now available for download.

Set Up and Use iCloud Shared Photo Library

With the launch of iOS 16.1, Apple’s new iCloud Shared Photo Library is available to use. Here’s how to get started and make the most of it.

Microsoft Announces Apple Music and Apple TV Apps for Windows Plus iCloud Integration With Windows 11 Photos

Microsoft announced upcoming Apple Music and Apple TV apps for Windows, as well as new integration between iCloud Photos and Windows Photos.

Apple Removes Mention of CSAM Detection on Child Safety Page, Code Remains in iOS

Apple has removed any mention of its controversial CSAM detection plans in iCloud Photos, although the code remains in iOS.

Your Mac's Smart Photo Import Feature

Try this Smart Import feature on your Mac to keep your photos organized in a folder structure. Learn more in this YouTube video from MacMost, then watch Mac Geek Gab 895 for more tips just like this one!

AdGuard: 'People Should be Worried About Apple CSAM Detection'

Adblocking company AdGuard is the latest to offer commentary on Apple’s controversial decision to detect CSAM in iCloud Photos. The team ponders ways to block it using their AdGuard DNS technology.

We consider preventing uploading the safety voucher to iCloud and blocking CSAM detection within AdGuard DNS. How can it be done? It depends on the way CSAM detection is implemented, and before we understand it in details, we can promise nothing particular.

Who knows what this base can turn into if Apple starts cooperating with some third parties? The base goes in, the voucher goes out. Each of the processes can be obstructed, but right now we are not ready to claim which solution is better and whether it can be easily incorporated into AdGuard DNS. Research and testing are required.

Scammer Stole Over 620,000 iCloud Photos Looking for Nudes

Hao Kuo Chi, 40, of La Puente, has agreed to plead guilty to four felonies, including conspiracy to gain unauthorized access to a computer.

Tell Apple You Oppose iOS 15 CSAM Detection With This Petition

Apple’s recent announcement that it will add a CSAM detection system to devices has angered many. Fight For The Future created a petition.

Corellium Will Award Researchers to Examine Apple CSAM Scanning Claims

On Tuesday Corellium announced the launch of the Corellium Open Security Initiative. It will support independent public research of mobile security.

Apple Now Scans Uploaded Content for Child Abuse Imagery (Update)

Andrew noticed something new in Apple’s privacy policy, last updated May 9, 2019. Apple now scans uploaded content for child abuse imagery.

Apple Posts FAQ About its CSAM Scanning in iCloud Photos

With iOS 15 Apple will add a system to detect examples of child abuse that get uploaded to iCloud Photos. They now have an FAQ to explain it.

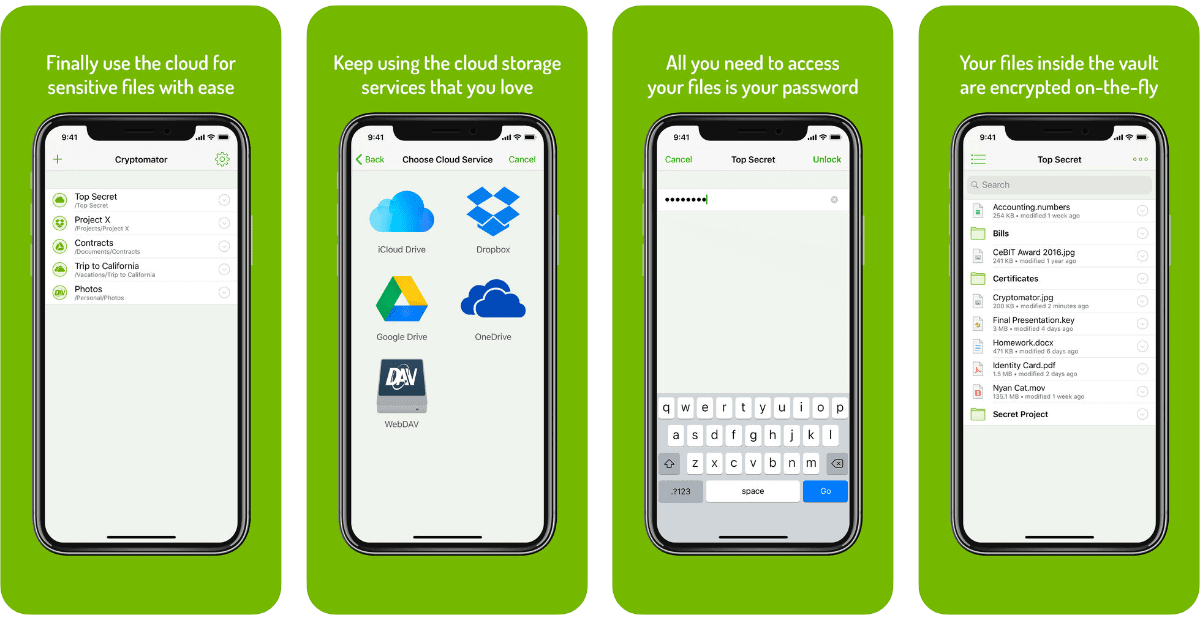

4 Alternatives to iCloud Photos That Don’t Scan Your Content

Does the thought of your Apple device scanning your iCloud Photos make you uneasy? Good news! Here are four private alternatives.

EFF Shares Statement on Apple Scanning for Illegal Content

This week we discovered that Apple plans to localize its scanning efforts to detect child sexual abuse material. The move has been widely criticized and the Electronic Frontier Foundation has shared its statement on the matter.

All it would take to widen the narrow backdoor that Apple is building is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan, not just children’s, but anyone’s accounts. That’s not a slippery slope; that’s a fully built system just waiting for external pressure to make the slightest change.

Apple Expands Child Safety Across Messages, Photos, and Siri

Apple is expanding its efforts to combat child sexual abuse material (CSAM) across its platform, with content scanning in Messages, iCloud Photos, and Siri.

Notes, Photos, and Reminders Now Appear on Mobile iCloud.com

Now that you can log onto iCloud.com via an iOS device, you can access some apps, like Apple Notes, Reminders, Photos, and Find My.

WWDC19: 5 iOS 13 Photo Features Apple Announced

iOS 13 brings a lot of new updates, and the Photos app is getting some big new features. Here are all of the iOS 13 photo features coming.