A speech Steve Jobs delivered to a group of MIT students is still as relevant today as it was in the spring of 1992.

MIT

'TinyML' Wants to Bring Machine Learning to Microcontroller Chips

TinyML is a joint project between IBM and MIT: a machine learning project capable of running on low-memory, low-power microcontrollers.

[Microcontrollers] have a small CPU, are limited to a few hundred kilobytes of low-power memory (SRAM) and a few megabytes of storage, and don’t have any networking gear. They mostly don’t have a mains electricity source and must run on cell and coin batteries for years. Therefore, fitting deep learning models on MCUs can open the way for many applications.
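To see why those limits matter, here is a rough back-of-the-envelope check of whether a model fits on a microcontroller. It is a minimal sketch under assumptions that are not from the article: the budget figures (320 KB SRAM, 2 MB flash) are hypothetical stand-ins for "a few hundred kilobytes" and "a few megabytes". The layer sizes are also made up, and the sketch simplifies by assuming weights live in flash while only the peak activation buffer must fit in SRAM.

```python
# Hypothetical MCU budget, loosely matching "a few hundred kilobytes of SRAM
# and a few megabytes of storage". Adjust for a real board.
SRAM_BYTES = 320 * 1024
FLASH_BYTES = 2 * 1024 * 1024


def model_footprint(layer_params, peak_activation_elems, bytes_per_value):
    """Return (weight_bytes, activation_bytes) for a model stored at a given precision."""
    weight_bytes = sum(layer_params) * bytes_per_value
    activation_bytes = peak_activation_elems * bytes_per_value
    return weight_bytes, activation_bytes


def fits_on_mcu(layer_params, peak_activation_elems, bytes_per_value=1):
    """Simplified check: weights must fit in flash, the largest activation buffer in SRAM."""
    weights, activations = model_footprint(layer_params, peak_activation_elems, bytes_per_value)
    return weights <= FLASH_BYTES and activations <= SRAM_BYTES


# Hypothetical small CNN: parameter counts per layer and its largest feature map.
params = [432, 4608, 18432, 73728, 2570]
peak_activations = 96 * 96 * 16

print("int8 fits:", fits_on_mcu(params, peak_activations, bytes_per_value=1))
print("float32 fits:", fits_on_mcu(params, peak_activations, bytes_per_value=4))
```

With these made-up numbers the weights fit in flash either way, but the float32 activation buffer (about 576 KB) overflows the SRAM budget while the 8-bit version (about 144 KB) does not. This illustrates why shrinking memory use, not just model size, is central to running deep learning on MCUs.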

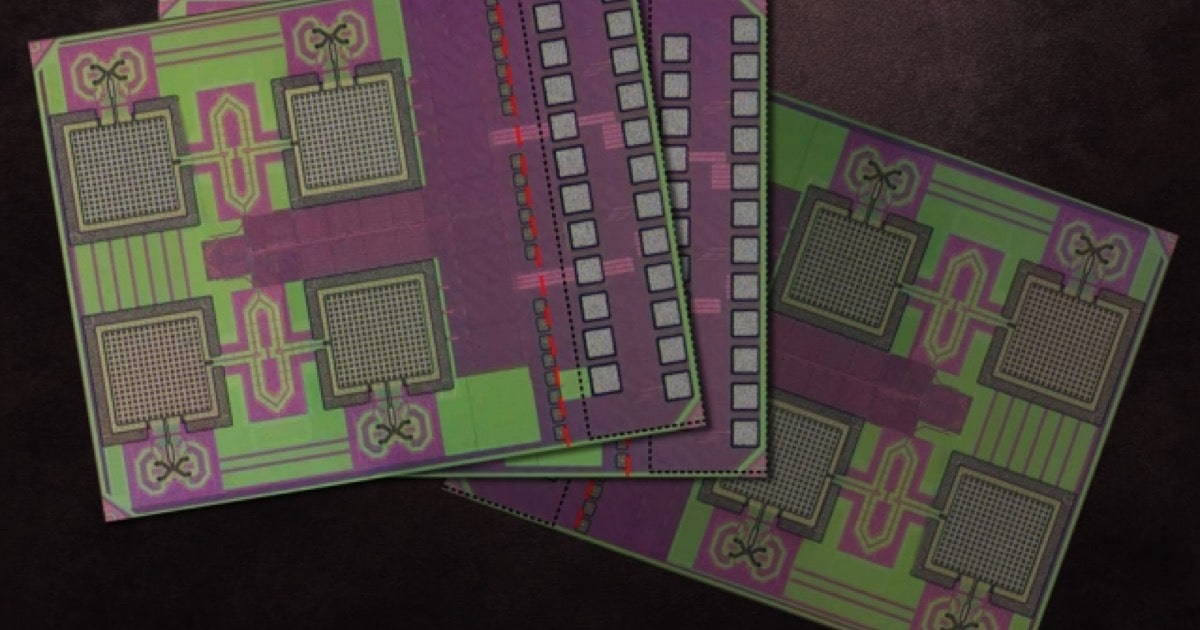

These Tiny Chips Could Help Stop Counterfeits

MIT researchers have created tiny chips, just 0.002 square inches in area, that could help combat counterfeiting in supply chains.

It’s millimeter-sized and runs on relatively low levels of power supplied by photovoltaic diodes. It also transmits data at far ranges, using a power-free “backscatter” technique that operates at a frequency hundreds of times higher than RFIDs. Algorithm optimization techniques also enable the chip to run a popular cryptography scheme that guarantees secure communications using extremely low energy.

Sounds interesting. I wonder if these could be used for more than fighting counterfeits.

Featured Image credit: MIT News

Scientists Can Make Neural Networks 90% Smaller

Researchers from MIT found a way to create neural networks that are 90% smaller but just as smart.

In a new paper, researchers from MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) have shown that neural networks contain subnetworks that are up to one-tenth the size yet capable of being trained to make equally accurate predictions — and sometimes can learn to do so even faster than the originals.

This article stood out to me because if neural networks can be smaller but just as smart, it might encourage more companies to run machine learning locally on the device, as Apple does.
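To make the idea of a much smaller subnetwork concrete, here is a minimal sketch of one round of magnitude pruning followed by resetting the surviving weights to their initial values, which is the general idea behind the CSAIL "lottery ticket" work. It is a simplification, not the researchers' code. The real procedure trains, prunes, rewinds and retrains, often over several rounds. Here "training" is faked with a random perturbation, and the function names `magnitude_mask` and `rewind_to_init` are hypothetical.

```python
import random


def magnitude_mask(weights, keep_fraction):
    """Return a 0/1 mask that keeps the largest-magnitude `keep_fraction` of weights."""
    k = max(1, round(len(weights) * keep_fraction))
    # Rank weight indices by absolute value, largest first, and keep the top k.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(ranked[:k])
    return [1 if i in keep else 0 for i in range(len(weights))]


def rewind_to_init(initial_weights, mask):
    """Reset surviving weights to their original initial values; pruned weights become 0."""
    return [w0 * m for w0, m in zip(initial_weights, mask)]


random.seed(0)
initial = [random.gauss(0, 1) for _ in range(1000)]
# Stand-in for training (hypothetical): perturb the initial weights.
trained = [w + random.gauss(0, 0.5) for w in initial]

mask = magnitude_mask(trained, keep_fraction=0.1)  # keep one-tenth of the weights
ticket = rewind_to_init(initial, mask)
print(sum(mask), "of", len(mask), "weights kept")  # prints "100 of 1000 weights kept"
```

The resulting `ticket` is the one-tenth-sized subnetwork that, in the lottery-ticket setting, would then be retrained from those original initial values.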

Belgian Programmer Solves 20-Year-Old Crypto Puzzle

In 1999, MIT created a puzzle designed to take 35 years to solve. Belgian programmer Bernard Fabrot has solved it about 15 years ahead of schedule.

The puzzle essentially involves doing roughly 80 trillion successive squarings of a starting number, and was specifically designed to foil anyone trying to solve it more quickly by using parallel computing.

“There have been hardware and software advances beyond what I predicted in 1999,” says MIT professor Ron Rivest, who first announced the puzzle in April 1999. “The puzzle’s fundamental challenge of doing roughly 80 trillion squarings remains unbroken, but the resources required to do a single squaring have been reduced by much more than I predicted.”
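The "successive squarings" idea can be illustrated with toy numbers. This is a minimal sketch assuming the repeated-squaring construction of Rivest, Shamir and Wagner's time-lock puzzles, which computes 2^(2^t) mod n. The tiny primes and the function names are hypothetical and chosen only for illustration; the real puzzle uses a huge modulus and roughly 80 trillion squarings. The slow path has to do each squaring after the previous one, so extra processors do not help. The puzzle's creator, who knows the factors of n, can skip almost all of that work.

```python
def solve_by_squaring(t, n, base=2):
    """Compute base**(2**t) mod n the slow way: t squarings, strictly one after another."""
    x = base % n
    for _ in range(t):
        x = x * x % n  # each step needs the previous result, so the steps cannot run in parallel
    return x


def solve_with_trapdoor(t, p, q, base=2):
    """Creator's shortcut: knowing n = p*q gives phi(n), so the exponent 2**t shrinks mod phi(n)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    # Euler's theorem (valid because gcd(base, n) == 1): base**phi == 1 (mod n).
    return pow(base, pow(2, t, phi), n)


# Hypothetical toy parameters: two small primes and a modest number of squarings.
p, q = 1009, 1013
n = p * q
t = 100_000

slow = solve_by_squaring(t, n)
fast = solve_with_trapdoor(t, p, q)
print(slow == fast)  # prints True
```

Faster hardware and better squaring routines shrink the cost of each loop iteration, which matches Rivest's point that the resources needed for a single squaring fell, but they do not reduce the number of sequential iterations.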