When Apple launched the A11 Bionic chip in 2017, it introduced us to a new type of processor: the Neural Engine. The Cupertino-based tech giant promised the Neural Engine would power the algorithms that recognize your face to unlock the iPhone, transfer your facial expressions onto animated emoji and more.

Since then, the Neural Engine has become faster, more powerful and more capable. Even so, many people wonder what exactly the Neural Engine is and what it does. Let’s dive in and see what this processor, dedicated to AI and machine learning, can do.

Apple Neural Engine Kicked Off the Future of AI and More

Apple’s Neural Engine is Cupertino’s name for its own neural processing unit (NPU). Such processors, also known as deep learning processors, handle the algorithms behind artificial intelligence (AI), augmented reality (AR) and machine learning (ML).

The Neural Engine allows Apple to offload certain tasks that the central processing unit (CPU) or graphics processing unit (GPU) used to handle.

You see, an NPU can (and is designed to) handle specific tasks much faster and more efficiently than more generalized processors can.

In the early days of AI and machine learning, almost all of the processing fell to the CPU. Later, engineers tasked the GPU to help. These chips aren’t designed with the specific needs of AI or ML algorithms in mind, though.

Enter the NPU

That’s why engineers came up with NPUs such as Apple’s Neural Engine. Engineers design these custom chips specifically to accelerate AI and ML tasks. Whereas manufacturers design a GPU to accelerate graphics tasks, the Neural Engine boosts neural network operations.
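To make “neural network operations” a little more concrete: much of the work in a typical neural network boils down to multiply-accumulate arithmetic, the kind of highly parallel math NPUs are built to speed up. The minimal Python sketch below, assuming a single fully connected layer with a ReLU activation and made-up weights, computes that layer’s output and counts the multiply-accumulates it performs. The function `dense_layer` and all the numbers are hypothetical illustrations, not anything Apple publishes about the Neural Engine’s internals.

```python
def dense_layer(inputs, weights, biases):
    """Compute ReLU(W @ x + b) for one fully connected layer.

    Returns the layer's outputs and the number of multiply-accumulate
    (MAC) operations performed along the way.
    """
    outputs = []
    macs = 0
    for row, bias in zip(weights, biases):
        acc = bias                      # start each output neuron at its bias
        for w, x in zip(row, inputs):
            acc += w * x                # one multiply-accumulate
            macs += 1
        outputs.append(max(0.0, acc))   # ReLU: clamp negative sums to zero
    return outputs, macs


# Hypothetical 3-input, 2-output layer with made-up weights.
x = [1.0, 2.0, 3.0]
W = [[0.5, -1.0, 0.25],
     [1.0, 1.0, 1.0]]
b = [0.0, -2.0]
y, macs = dense_layer(x, W, b)
print(y, macs)
```

A real model repeats this pattern across far larger layers, so the multiply-accumulate count climbs quickly. Running those operations in parallel, at low power, is exactly the kind of job a dedicated NPU takes off the CPU and GPU.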

Apple, of course, isn’t the only tech company to design neural processing units. Google has its TPU, or Tensor Processing Unit. Samsung has designed its own version of the NPU, as have Intel, Nvidia, Amazon and more.

To put it into perspective, for certain ML tasks an NPU can run as much as 10,000 times faster than a GPU. It also consumes less power in the process, making it both faster and more efficient than a GPU at the same job.

Tasks the Apple Neural Engine Takes Responsibility For

It’s time to dive into just what sort of jobs the Neural Engine takes care of. As previously mentioned, every time you use Face ID to unlock your iPhone or iPad, your device uses the Neural Engine. When you send an animated Memoji message, the Neural Engine is interpreting your facial expressions.

That’s just the beginning, though. Cupertino also employs its Neural Engine to help Siri better understand your voice. In the Photos app, when you search for images of a dog, your iPhone finds them with ML, and therefore with the Neural Engine.

Initially, the Neural Engine was off-limits to third-party developers; only Apple’s own software could use it. That changed in 2018, when the A12 Bionic let apps built with Core ML, the machine learning framework Apple had introduced with iOS 11 the year before, run their models on the Neural Engine. That’s when things got interesting.

Core ML allowed developers to start taking advantage of the Neural Engine. Today, developers can use Core ML to analyze video or classify images and sounds. It can even detect and classify objects, actions and drawings.

History of Apple’s Neural Engine

Since it first announced the Neural Engine in 2017, Apple has steadily (and exponentially) made the chip more efficient and powerful. The first iteration had two neural cores and could process up to 600 billion operations per second.

The latest M2 Pro and M2 Max SoCs (systems on a chip) take that capability much, much further. Each of their Neural Engines has 16 neural cores and can grind through up to 15.8 trillion operations every second.

| SoC | Introduced | Process node | Neural cores | Peak operations per second |
|---|---|---|---|---|
| Apple A11 | Sept. 2017 | 10nm | 2 | 600 billion |
| Apple A12 | Sept. 2018 | 7nm | 8 | 5 trillion |
| Apple A13 | Sept. 2019 | 7nm | 8 | 6 trillion |
| Apple A14 | Oct. 2020 | 5nm | 16 | 11 trillion |
| Apple M1 | Nov. 2020 | 5nm | 16 | 11 trillion |
| Apple A15 | Sept. 2021 | 5nm | 16 | 15.8 trillion |
| Apple M1 Pro/Max | Oct. 2021 | 5nm | 16 | 11 trillion |
| Apple M1 Ultra | March 2022 | 5nm | 32 | 22 trillion |
| Apple M2 | June 2022 | 5nm | 16 | 15.8 trillion |
| Apple A16 | Sept. 2022 | 4nm | 16 | 17 trillion |
| Apple M2 Pro/Max | Jan. 2023 | 5nm | 16 | 15.8 trillion |
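The table also supports some quick arithmetic. The minimal Python sketch below, using only the figures listed in it, computes the overall jump from the A11 to the M2 Pro/Max and the peak throughput per neural core. Dividing throughput evenly across cores, and treating Apple’s peak figures as directly comparable between generations, are simplifying assumptions; `speedup` and `ops_per_core` are hypothetical helper names.

```python
# Figures copied from the table: (neural cores, peak operations per second).
NEURAL_ENGINES = {
    "A11": (2, 600e9),
    "A12": (8, 5e12),
    "A13": (8, 6e12),
    "A14": (16, 11e12),
    "M1": (16, 11e12),
    "A15": (16, 15.8e12),
    "M1 Pro/Max": (16, 11e12),
    "M1 Ultra": (32, 22e12),
    "M2": (16, 15.8e12),
    "A16": (16, 17e12),
    "M2 Pro/Max": (16, 15.8e12),
}


def speedup(newer: str, older: str) -> float:
    """Ratio of peak operations per second between two SoCs."""
    return NEURAL_ENGINES[newer][1] / NEURAL_ENGINES[older][1]


def ops_per_core(soc: str) -> float:
    """Peak operations per second, assuming an even split across neural cores."""
    cores, peak_ops = NEURAL_ENGINES[soc]
    return peak_ops / cores


for soc in NEURAL_ENGINES:
    print(f"{soc}: {ops_per_core(soc) / 1e9:.1f} billion ops/sec per core")
print(f"A11 to M2 Pro/Max: {speedup('M2 Pro/Max', 'A11'):.1f}x")
```

By this rough measure, the roughly 26-fold jump from the A11 to the M2 Pro/Max comes from both more cores (2 versus 16) and more throughput per core (300 billion versus 987.5 billion operations per second).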

Apple has truly molded the iPhone experience, and now the Mac experience, around its Neural Engine. When your iPhone reads the text in your photos, it’s using the Neural Engine. When Siri figures out that you almost always open a specific app at a certain time of day, that’s the Neural Engine at work.

Since first introducing the Neural Engine, Apple has even put its NPU to work on a task as seemingly mundane as photography. When Apple says its image processor makes a certain number of calculations or decisions per photo, the Neural Engine is involved.

The Foundation of Apple’s Newest Innovations

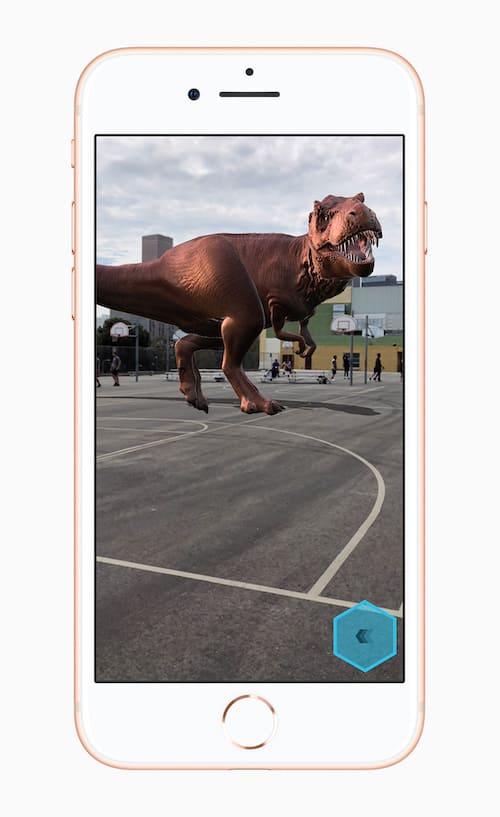

The Neural Engine also helps with everything Apple does in AR. Whether it’s allowing the Measure app to give you the dimensions of an object or projecting a 3D model into your environment through the camera’s viewfinder, that’s ML. If it’s ML, chances are the Apple Neural Engine boosts it tremendously.

This means, of course, that Apple’s future innovations hinge on the Neural Engine. When Cupertino finally unleashes its mixed reality headset on the world, the Neural Engine will be a big part of that technology.

Further still, Apple Glass, the rumored augmented reality glasses expected to follow the mixed reality headset, would almost certainly depend on machine learning and the Neural Engine.

So, from the simple act of recognizing your face to unlock your iPhone to helping AR transport you to a different environment, the Neural Engine is at play.