Camera Control on the iPhone 16 lineup offers an intuitive way to open the Camera app, capture photos, and adjust camera settings on the fly. Until now, however, this touch-sensitive button had no use beyond photography. With the iOS 18.2 update, Apple has rolled out several much-needed changes that finally make Camera Control the feature it was always meant to be.

Starting today, you’ll also be able to use Camera Control to access Apple’s new Visual Intelligence tool. Here’s an in-depth guide explaining what this new tool can do and how you can use it on your iPhone.

What is Visual Intelligence, and How Does it Work?

Visual Intelligence is a new search tool designed to help iPhone 16 users learn about the world around them using just their camera. It works much like Google Lens or Circle to Search: point your camera at objects, text, or scenes, and it quickly pulls up relevant information. Powered by Apple Intelligence, it makes it easier to identify items, translate text, or learn about a subject.

Visual Intelligence uses a combination of Apple Intelligence and ChatGPT’s vision capabilities to deliver search results. You can open this tool quickly, even from the Lock Screen, by pressing and holding the new Camera Control button on the iPhone 16. It then scans what the camera sees to fetch useful results from the web.

What Can I Do With Visual Intelligence?

With Visual Intelligence, looking up information about anything is as simple as pointing your iPhone at it. If you’re unsure about how it can help you, here’s a quick list of the things it can do:

- Use ChatGPT’s vision capabilities to get answers to questions you might have about something.

- Perform a quick reverse image search of the subject to find visually similar images or products.

- Identify plants and animals or landmarks and paintings around you to learn more about them.

- Automatically detect text from objects and have it translated, summarized, or read out loud.

- Detect important dates on a poster or invitation and create new calendar events for them.

- Point your iPhone at a shop, cafe, or restaurant to check its reviews, opening hours, and more.

The sky’s the limit when it comes to what you can do with Visual Intelligence. Apart from the use cases listed above, it can help you with a bunch of other tasks. It can solve mathematical equations for you, look up nutritional values for what you’re eating, copy text from a poster, scan QR codes, and more.

How to Use Visual Intelligence on iPhone 16

As long as you’ve enabled Apple Intelligence (and aren’t facing issues with the Camera Control button), you’ll have access to Visual Intelligence after installing iOS 18.2.

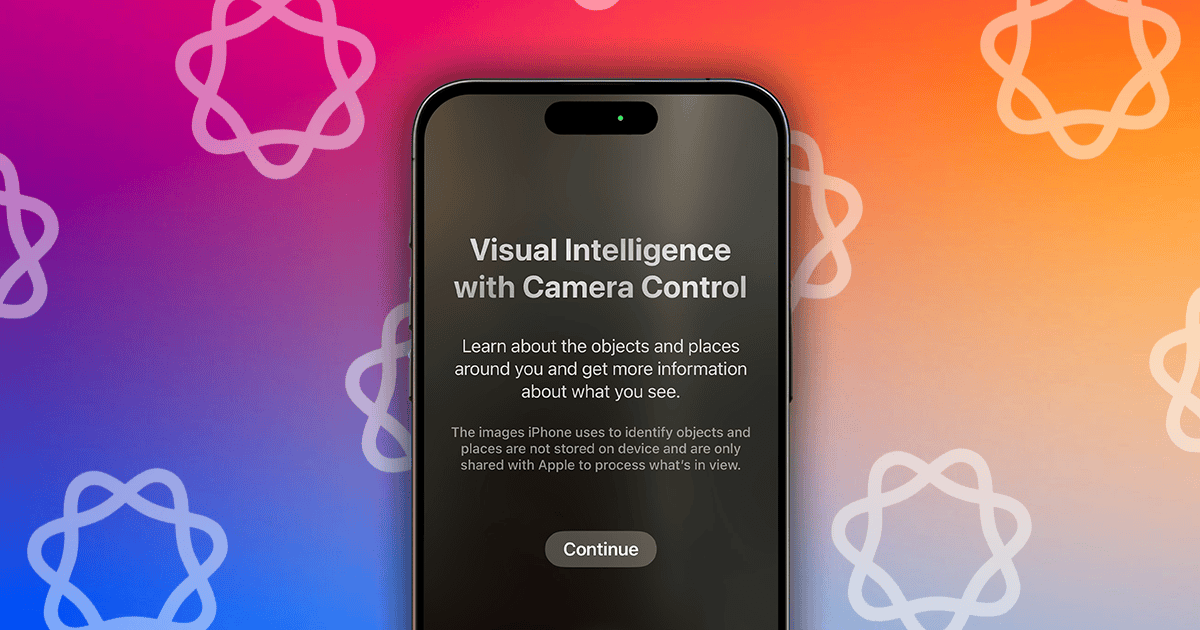

Now, whenever you want to use Visual Intelligence, press and hold the Camera Control button on your iPhone 16. The first time you do this, you’ll be greeted with a splash screen. Simply tap Continue and point your iPhone at the subject. Then, tap one of these options:

- Ask: Tap this option to chat with ChatGPT and get answers to queries related to the subject.

- Capture: Tap this button to capture a photo of the object and access more context-aware features.

- Search: Tap this option to perform a Google reverse image search and pull up visually similar results.
