Apple’s iOS 18.4 introduces an upgraded Visual Intelligence feature, aiming to challenge Google Lens. Google Lens has long dominated visual search, but Apple’s latest iteration integrates ChatGPT for deeper insights. Does it hold up in real-world use? I tested both to find out.

Activation & Accessibility

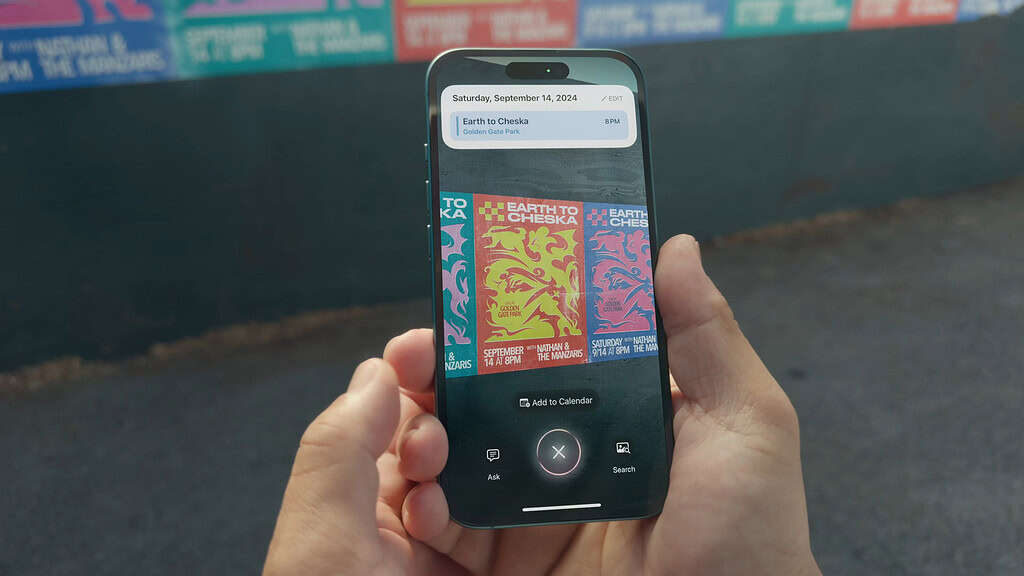

Apple has integrated Visual Intelligence deeply into the iPhone 16 series. The most convenient way to activate it is by long-pressing the Camera Control button, making it feel like an extension of the camera rather than a separate feature. Alternatively, you can set up a back-tap gesture for activation, similar to Google’s Quick Tap.

However, this tight integration has a major downside: Visual Intelligence is limited to the iPhone 16 lineup and the iPhone 15 Pro and 15 Pro Max. If you own an older iPhone, you’re out of luck.

Google Lens, on the other hand, is far more widely available: it works on nearly all Android devices and even on iPhones via the Google app. While it lacks a dedicated button like Apple’s Camera Control, you can easily launch it from the Google Assistant widget or the Google app. This broad availability makes it the clear winner in terms of reach.

Search Accuracy & Real-World Performance

To compare their performance, I tested my iPhone 15 Pro and Pixel 7 Pro in a few real-world scenarios.

1. Business Recognition: Apple Wins on Automation

I stopped at a local barbershop to check its hours. Apple’s Visual Intelligence instantly recognized the store and pulled up its details (Yelp reviews, photos, and quick action buttons for calling or visiting the website) all without requiring an extra tap. Google Lens, while accurate, required an additional step: I had to press the shutter button before it provided business details.

2. Plant Identification: A Mixed Bag

I pointed both at a plant. Apple quickly identified it as a tomato plant and provided care tips via ChatGPT. Google Lens, however, misidentified it as a jade plant. Beyond getting the identification right, Apple’s ChatGPT integration added a practical touch by giving actionable advice rather than just a name.

3. Translation: Google Still Reigns Supreme

Google Lens’s built-in translation tool is a game-changer. I tested it on an Arabic menu, and it instantly provided clear, accurate translations. Apple, while capable of identifying text, lacks a dedicated translation function, requiring me to manually copy the text into a translation app. This is a significant shortcoming compared to Google’s seamless experience.

Unique Features: ChatGPT vs. Google’s Tools

Apple’s biggest strength is its Ask feature, powered by ChatGPT. Unlike Google Lens, which primarily focuses on identification and search, Visual Intelligence allows users to ask contextual questions. For example, after identifying a flower, I asked how to care for it, and ChatGPT provided specific instructions. Similarly, when I scanned a math equation, it walked me through the solution step by step.

Google Lens takes a different approach by offering dedicated tools for various tasks, including:

- Homework Helper: Ideal for solving equations.

- Google Translate: A must-have for travelers.

- Shopping Assistant: Quickly finds where to buy an item online.